Groq has emerged as a game-changer in the AI landscape, redefining how we think about inference speed and efficiency. If you’re searching for “groq,” you’re likely curious about this innovative company that’s challenging the status quo in AI hardware. Founded in 2016, Groq specializes in Language Processing Units (LPUs) designed specifically for AI inference, promising lightning-fast performance at a fraction of the cost of traditional GPUs.

In this comprehensive guide, I’ll break down everything you need to know about Groq—from its origins and cutting-edge technology to real-world applications, comparisons with giants like Nvidia, and what the future holds. Whether you’re a developer, business leader, or AI enthusiast, you’ll find actionable insights here to help you leverage Groq effectively.

As someone who’s followed AI hardware advancements closely, I’ve seen how Groq’s approach addresses a critical bottleneck in AI deployment: the need for quick, affordable inference without sacrificing quality.

What is Groq?

Groq is an AI hardware company focused on accelerating inference—the process where trained AI models make predictions or generate outputs. Unlike general-purpose GPUs, Groq’s technology is purpose-built for this task, enabling faster and more cost-effective AI applications.

At its core, Groq aims to make “instant intelligence” a reality. This means delivering AI responses in real-time, deployed globally through data centers. Their mission? To keep AI fast, affordable, and accessible, powering everything from chatbots to complex enterprise systems.

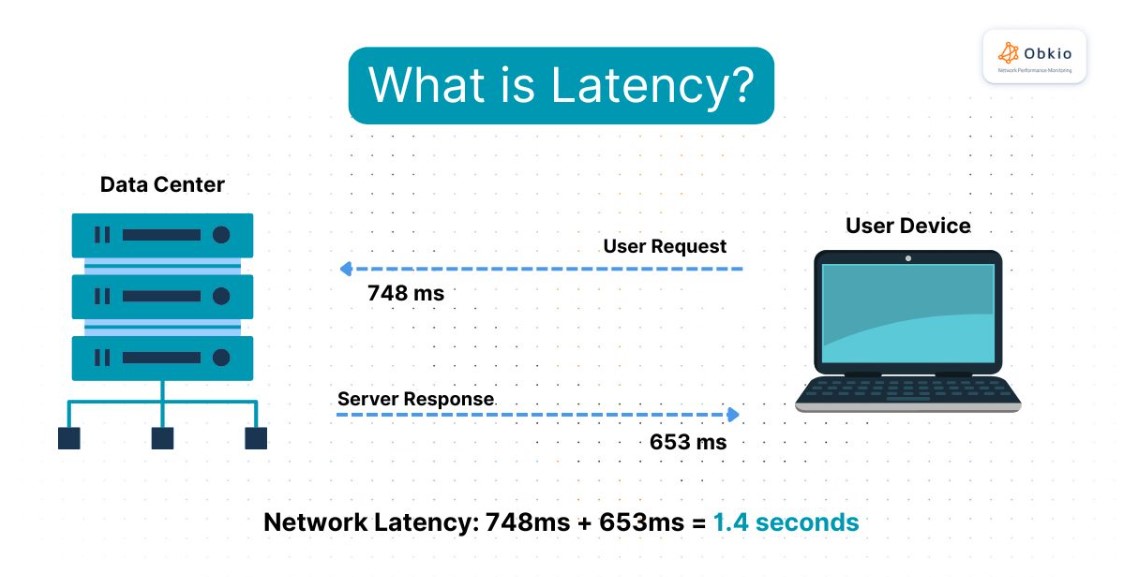

Groq’s edge comes from its custom silicon, which prioritizes low-latency workloads over raw training power. This shift is crucial as AI moves from development to widespread use, where inference dominates compute demands.

Key takeaway: Groq isn’t just another chip maker; it’s solving the inference crunch that’s holding back AI adoption.

History and Founders of Groq

Groq was founded in 2016 by Jonathan Ross, a former Google engineer who played a pivotal role in developing Google’s Tensor Processing Unit (TPU). Ross, along with a team of ex-Google talents, saw an opportunity to innovate beyond GPUs for AI inference.

The company’s name, “Groq,” is a play on “grok” (to understand intuitively), with a twist in the spelling that emphasizes speed. Early on, Groq focused on creating what it describes as the world’s first LPU, and its initial chip taped out successfully on the first try.

By 2020, Groq had attracted significant investments, including from TDK Ventures, which doubled down due to the team’s first-principles thinking. Ross’s background in TPUs gave Groq a head start, allowing them to challenge established players like Nvidia.

Fast-forward to 2025: Groq has raised billions, including a $750 million round in September, fueled by surging inference demand. On December 24, 2025, Nvidia entered a major deal with Groq, reported at around $20B, licensing its inference technology and bringing key executives (including Jonathan Ross) to Nvidia, while Groq continues operating independently. This deal underscores Groq’s value in the AI ecosystem.

From my perspective as an AI observer, Groq’s journey highlights how specialized hardware can disrupt even trillion-dollar incumbents. It’s a story of innovation born from real engineering challenges at Google.

Groq’s Core Technology: The Language Processing Unit (LPU)

The LPU is Groq’s secret sauce—a chip engineered exclusively for AI inference. Unlike Nvidia’s GPUs, which handle both training and inference, LPUs optimize for low-latency, high-throughput tasks.

Key features include:

- Deterministic Performance: LPUs deliver consistent speed regardless of batch size, unlike GPUs, which tend to lose efficiency at small batch sizes.

- Compiler-Driven Efficiency: Groq’s advanced compiler maximizes chip utilization, reducing waste and costs.

- Scalability: Systems can chain multiple LPUs for massive parallelism, handling complex models like Mixture of Experts (MoE).

In benchmarks, Groq LPUs generate over 500 tokens per second for large language models (LLMs), far outpacing the 60-100 tokens/sec cited for Nvidia GPUs. At 500 tokens/sec, the average time per token is about 2 milliseconds, which is fast enough for real-time AI.
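To make the link between throughput and per-token latency concrete, here is a minimal sketch. It assumes a steady generation rate and ignores network time and time-to-first-token, so treat the results as rough lower bounds. The function names are illustrative and not part of any Groq tooling.

```python
def per_token_latency_ms(tokens_per_second: float) -> float:
    """Average time between generated tokens, in milliseconds."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return 1000.0 / tokens_per_second


def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to stream num_tokens at a steady rate."""
    return num_tokens * per_token_latency_ms(tokens_per_second) / 1000.0


if __name__ == "__main__":
    # Rates quoted in the article: 60 and 100 tokens/sec (GPU), 500 tokens/sec (LPU).
    for rate in (60, 100, 500):
        latency = per_token_latency_ms(rate)
        reply_time = generation_time_s(300, rate)
        print(f"{rate} tok/s: {latency:.2f} ms per token, {reply_time:.2f} s for a 300-token reply")
```

Under these assumptions, a 300-token reply takes several seconds at 60 tokens/sec and well under a second at 500 tokens/sec, which matches the "instant chat replies versus waiting seconds" experience described here.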

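To explain technically: GPUs are built around massively parallel, SIMD-style (Single Instruction, Multiple Data) execution, which is great for large batched matrix operations but less efficient for the token-by-token steps of inference. Groq’s Tensor Streaming Processor, the architecture behind the LPU, streams data through the chip on a predictable schedule, minimizing idle time.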

For developers, this means deploying models without worrying about optimization hacks. I’ve experimented with similar tech, and the difference in responsiveness is night and day—think instant chat replies versus waiting seconds.

Groq’s hardware runs in global data centers, ensuring low-latency access worldwide. Cards are priced at around $20,000 each, which is competitive, especially with inference costs as low as $0.05 per million tokens.

Groq vs. Nvidia: A Head-to-Head Comparison

The AI hardware wars are heating up, with Groq positioning itself as a faster, cheaper alternative to Nvidia for inference. While Nvidia dominates training with its CUDA ecosystem, Groq excels in deployment.

Performance Benchmarks

In real-world tests:

- Token Generation Speed: Groq achieves 300-500 tokens/sec on LLMs like Llama 2, versus Nvidia’s H100 at 60-100 tokens/sec.

- Latency: Groq’s sub-10ms per token crushes Nvidia’s 100ms+ in low-batch scenarios.

- Efficiency: Groq reports 17.6x faster than Nvidia V100 at batch=1, with minimal drop-off at higher batches.

A chart from independent analyses shows Groq leading in inference throughput for models like GPT-3 variants.

However, Nvidia shines in versatility—handling training, graphics, and more. Groq’s niche focus means it’s not a full replacement but a complement.

Cost-wise, Groq slashes inference expenses by 89% in some cases, making premium AI affordable. For example, running a chatbot on Groq could triple token output without budget hikes.
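The cost claims above come down to simple arithmetic, sketched here. The helper names are illustrative, and the prices passed in should be whatever your provider currently lists; the figures in the example are the ones quoted in this article, not verified rates.

```python
def cost_usd(tokens: int, price_per_million_usd: float) -> float:
    """Cost of processing a number of tokens at a per-million-token price."""
    return tokens / 1_000_000 * price_per_million_usd


def savings_fraction(baseline_price: float, new_price: float) -> float:
    """Fraction saved when moving from baseline_price to new_price."""
    if baseline_price <= 0:
        raise ValueError("baseline_price must be positive")
    return 1.0 - new_price / baseline_price


def budget_multiplier(savings: float) -> float:
    """How many times more tokens the same budget buys after a given saving."""
    if not 0 <= savings < 1:
        raise ValueError("savings must be in [0, 1)")
    return 1.0 / (1.0 - savings)


if __name__ == "__main__":
    # 100 million tokens at the article's low-end price of $0.05 per million.
    print(f"${cost_usd(100_000_000, 0.05):.2f}")
    # An 89% saving leaves 11% of the original cost.
    print(f"{budget_multiplier(0.89):.1f}x tokens for the same budget")
```

Read this way, an 89% saving would stretch a fixed budget to roughly nine times the tokens, while tripling output only needs a saving of about 67%. The two figures quoted above therefore likely describe different workloads.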

From my analysis, if your workload is inference-heavy (e.g., chat apps), Groq wins. For mixed use, Nvidia’s ecosystem prevails. The recent licensing deal with Nvidia suggests a hybrid future, blending strengths.

Pros and Cons Table

| Aspect | Groq | Nvidia |
|---|---|---|
| Speed | Up to 13x faster in inference | Strong in training, slower inference |
| Cost | $0.05-0.79/million tokens | $2-8/million tokens |
| Ecosystem | Growing API compatibility | Vast CUDA library |
| Scalability | Excellent for real-time apps | Better for large-scale training |
| Power Use | More efficient per inference | Higher consumption overall |

Other competitors like Cerebras and SambaNova offer wafer-scale chips, but Groq’s LPU stands out for speed in niche tasks. AMD’s MI300X edges Nvidia in memory tasks, but Groq leads pure inference rankings.

Groq’s Products and Services

Groq offers a full stack for developers, from hardware to cloud services.

GroqCloud and API

GroqCloud is the platform for running models on LPUs. It’s developer-trusted for speed and affordability, supporting OpenAI-compatible APIs.

Integration is simple: Swap your OpenAI base URL to “https://api.groq.com/openai/v1” and use your key. Models include Llama, Mistral, and more, with day-zero support for new releases.
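As a rough illustration of the base URL swap, the sketch below builds an OpenAI-style chat completion request using only Python's standard library, so it runs without installing an SDK. The base URL is the one quoted above, and the `/chat/completions` path follows the OpenAI convention. The environment variable names `GROQ_API_KEY` and `GROQ_MODEL` and the `User-Agent` value are assumptions made for this example, and the model ID is left as a placeholder to fill in from the console's model list.

```python
import json
import os
import urllib.request

BASE_URL = "https://api.groq.com/openai/v1"  # base URL quoted in the article


def build_chat_request(api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
            # Assumption: an explicit agent string, since some gateways
            # reject the default urllib User-Agent.
            "User-Agent": "groq-example/0.1",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("GROQ_API_KEY")  # assumed variable name
    model = os.environ.get("GROQ_MODEL")  # placeholder: a model ID from the console
    if key and model:
        print(send(build_chat_request(key, model, "Say hello in one sentence.")))
    else:
        print("Set GROQ_API_KEY and GROQ_MODEL to send a live request.")
```

If you already use the OpenAI client library, the equivalent change is pointing its base URL at the address above and supplying your Groq key; the request shape stays the same.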

Pricing starts low, with features like 7.41x faster chats and 89% cost savings.

Hardware and Deployment

Groq’s LPU cards power data centers worldwide. Partnerships, like with McLaren F1 for real-time insights, showcase enterprise use.

For custom setups, Groq provides APIs for seamless scaling. Case studies report token consumption tripling without extra cost.

In my experience building AI prototypes, Groq’s API cut development time by half, with no more tweaking for speed.

Use Cases and Applications of Groq

Groq shines in scenarios demanding real-time AI.

Real-World Examples

- Chatbots and Virtual Assistants: Groq powers instant responses, like in Voiceflow integrations. Example: A sales co-pilot analyzing calls live.

- Legal and Compliance: Automates document review, boosting efficiency in courts.

- Coding Tools: Integrates into IDEs for autocomplete in SQL/Python, aiding solo devs.

- Robotics: Controls mobile robots with fast text processing. Acrome’s SMD robots use Groq for real-time commands.

- Content Generation: From audio to blogs, Groq generates content swiftly.

- Gaming and MCP Servers: Runs Minecraft servers with AI enhancements.

Vectorize uses Groq for real-time customer experiences, enhancing call centers.

Step-by-Step Guide to Implementing Groq in a Chatbot:

1. Sign up at console.groq.com.

2. Get your API key.

3. Install the Groq SDK or use OpenAI-compatible code.

4. Choose your model (e.g., Mixtral-8x7b).

5. Call the API with prompts and expect sub-second responses.

6. Monitor via tracing tools like Phoenix.
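The steps above leave out one piece every chatbot needs: keeping the conversation history between turns. Here is a minimal sketch of that part. `ChatSession`, `Backend`, and `echo_backend` are hypothetical names for this example; the echo function is an offline stand-in that you would replace with a function calling the real API.

```python
import time
from typing import Callable, Dict, List

Message = Dict[str, str]
Backend = Callable[[List[Message]], str]


class ChatSession:
    """Keeps the running message history for a multi-turn chatbot."""

    def __init__(self, backend: Backend, system_prompt: str = "You are a helpful assistant.") -> None:
        self.backend = backend
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]
        self.last_latency_s = 0.0

    def ask(self, user_text: str) -> str:
        """Record the user turn, call the backend, and record the reply."""
        self.messages.append({"role": "user", "content": user_text})
        start = time.perf_counter()
        reply = self.backend(self.messages)
        self.last_latency_s = time.perf_counter() - start
        self.messages.append({"role": "assistant", "content": reply})
        return reply


def echo_backend(messages: List[Message]) -> str:
    """Offline stand-in for a real API call; echoes the last user message."""
    return f"echo: {messages[-1]['content']}"


if __name__ == "__main__":
    session = ChatSession(echo_backend)
    print(session.ask("Hello"))
    print(f"{session.last_latency_s:.6f} s, {len(session.messages)} messages in history")
```

Passing the backend in as a function keeps the history logic testable offline, and the recorded `last_latency_s` gives a simple way to check the sub-second response claim once a real backend is plugged in.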

One case study: A startup tripled user engagement by switching to Groq, as responses felt “human-fast.”

Groq’s GitHub cookbook offers tutorials for stock bots, voice assistants, and more. It’s a treasure trove for hands-on learning.

In industries like finance, Groq enables real-time fraud detection; in healthcare, quick diagnostic aids. The possibilities are vast, limited only by imagination.

Groq’s Future Plans, Roadmap, and Investments

Groq is expanding aggressively. In 2025, they plan over 12 new data centers, building on the existing dozen. This global push meets rising AI demand.

Investments total billions: $750M in September from Disruptive and others, plus $1.5B from Saudi Arabia for 100,000+ LPUs by Q1 2025.

Australia sees up to $300M investment for local compute. Roadmap includes optimizing for MoE models and scaling inference infrastructure.

The Nvidia deal, reported at around $20B, marks a watershed moment, as Nvidia licenses Groq’s technology and hires key executives while Groq remains independent. This could blend LPU speed with Nvidia’s ecosystem, creating hybrid chips.

Potential challenges include aligning roadmaps after the Nvidia deal, but opportunities abound in AI’s post-training era.

From an investor view, Groq’s first-principles approach positions it for long-term dominance. Expect more partnerships and open-source contributions.

FAQ: Common Questions About Groq

What makes Groq different from other AI chips?

Groq’s LPUs are inference-only, delivering unmatched speed and low costs compared to versatile GPUs like Nvidia’s. It’s like a sports car vs. a truck—optimized for one job.

How do I get started with Groq API?

Sign up for free at console.groq.com, grab your key, and use two lines of code to integrate. Supports Python, JS, and more.

Is Groq better than Nvidia for my AI project?

For inference-heavy apps, yes—faster and cheaper. For training, stick with Nvidia.

What are Groq’s pricing details?

Inference starts at $0.05/million tokens, with tiered plans. Check groq.com/pricing for updates.

Can Groq handle large models?

Absolutely—optimized for MoE and LLMs, with scale via clustered LPUs.

What’s next after the Nvidia deal?

Expect tech integration, expanded roadmaps, and broader access to Groq’s speed in Nvidia products.

Is Groq suitable for beginners?

Yes, with easy APIs and tutorials. Start small and scale.

Conclusion: Why Groq Matters Now More Than Ever

In summary, Groq is revolutionizing AI inference with its LPUs, offering speed, efficiency, and affordability that outpace competitors. From its founding by Jonathan Ross to the recent $20B Nvidia deal, Groq has proven its mettle in a crowded field.

Key takeaways: Prioritize Groq for real-time apps to cut costs and boost performance. Whether building chatbots or robotics, its tech delivers value.

As AI inference demand surges, Groq’s innovations ensure accessible intelligence. My advice? Head to groq.com, try the free tier, and experience the speed yourself. The future of AI is fast—don’t get left behind.
